Entropy


Entropy is a measure of disorder, or of a system's progression towards maximum disorder, the state called thermodynamic equilibrium; it reflects the number of ways in which a thermodynamic system can be arranged. In accordance with the second law of thermodynamics, the entropy of an isolated system never decreases, because isolated systems spontaneously evolve toward the state of maximum entropy, thermodynamic equilibrium. Entropy is a state function: a property that depends only on the current state of the system, not on the way in which the system reached that state.

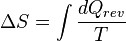

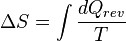

The original definition of entropy is

$$dS = \frac{\delta Q_{\text{rev}}}{T}$$

It is also the macroscopic definition, because it does not take into account the microscopic components of the system. In this equation S is the entropy of the system, T is the thermodynamic (absolute) temperature of a closed system, and δQ_rev is an infinitesimal amount of heat transferred reversibly into the system, hence the subscript "rev".
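As a worked illustration of this definition (an example of ours, not taken from the cited sources), consider heating 1 kg of water reversibly from 293.15 K to 353.15 K. Assuming a constant specific heat capacity c ≈ 4184 J/(kg·K), each small step adds δQ_rev = m c dT, so integrating gives ΔS = m c ln(T₂/T₁). The Python sketch below sums δQ_rev/T over many small steps and compares the total with that closed form; the function names are hypothetical.

```python
import math

def entropy_change_stepwise(mass_kg, c_j_per_kg_k, t1_k, t2_k, steps=100_000):
    """Approximate ΔS = ∫ δQ_rev / T by summing many small reversible heat steps.

    Assumes a constant specific heat capacity, so each step adds δQ_rev = m·c·dT.
    """
    dt = (t2_k - t1_k) / steps
    total = 0.0
    for i in range(steps):
        t_mid = t1_k + (i + 0.5) * dt         # temperature in the middle of this step
        dq_rev = mass_kg * c_j_per_kg_k * dt  # heat added reversibly during the step
        total += dq_rev / t_mid               # contribution δQ_rev / T
    return total

def entropy_change_closed_form(mass_kg, c_j_per_kg_k, t1_k, t2_k):
    # Integrating m·c·dT / T exactly gives m·c·ln(T2 / T1)
    return mass_kg * c_j_per_kg_k * math.log(t2_k / t1_k)

# 1 kg of water, c ≈ 4184 J/(kg·K) assumed constant, heated from 20 °C to 80 °C
numeric = entropy_change_stepwise(1.0, 4184.0, 293.15, 353.15)
exact = entropy_change_closed_form(1.0, 4184.0, 293.15, 353.15)
print(f"stepwise: {numeric:.3f} J/K, closed form: {exact:.3f} J/K")
```

Both values come out at roughly 779 J/K: the step-by-step sum is simply the definition applied literally, and it converges to the logarithm as the steps shrink.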

Entropy of a System

Thermodynamic entropy is very important in both physics and chemistry. It is a non-conserved state function [1]. The concept of entropy evolved from attempts to explain why reactions occur spontaneously, rather than from their reversible counterparts [2]. An increase in the total entropy of an isolated system marks an irreversible change, which is why the arrow of entropy is said to point in the same direction as the arrow of time.
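A minimal sketch of why spontaneous heat flow increases entropy, assuming two large reservoirs whose temperatures stay fixed (so each reservoir's entropy changes by Q/T) and an illustrative transfer of 1000 J between 400 K and 300 K; the numbers and function names are hypothetical.

```python
def reservoir_entropy_change(heat_in_j, temperature_k):
    # A large reservoir stays at a fixed temperature, so its ΔS is simply Q / T
    return heat_in_j / temperature_k

def total_entropy_change(q_j, t_hot_k, t_cold_k):
    """Total ΔS when heat q_j leaves the hot reservoir and enters the cold one."""
    ds_hot = reservoir_entropy_change(-q_j, t_hot_k)   # hot side loses heat
    ds_cold = reservoir_entropy_change(q_j, t_cold_k)  # cold side gains heat
    return ds_hot + ds_cold

forward = total_entropy_change(1000.0, 400.0, 300.0)    # hot -> cold (spontaneous)
backward = total_entropy_change(-1000.0, 400.0, 300.0)  # cold -> hot (not spontaneous)
print(f"hot -> cold: {forward:+.3f} J/K")
print(f"cold -> hot: {backward:+.3f} J/K")
```

Heat flowing from hot to cold gives a total change of about +0.83 J/K. The reverse flow would give the same magnitude with a negative sign, which is why it never happens on its own in an isolated system.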

History

Entropy was introduced in 1850 by Rudolf Clausius [3], one of the important breakthroughs in 19th-century physics. Building on this, Ludwig Boltzmann, Josiah Willard Gibbs and James Clerk Maxwell gave entropy a statistical basis. This work led to the Maxwell-Boltzmann distribution, whose graphs are still widely used in chemistry in the 21st century.

Carnot Cycle

Carnot's principle states that work can only be done when there is a temperature difference [4]. It also implies that the work should be some function of the temperature difference and of the heat absorbed. Rudolf Clausius's discovery arose from his study of Carnot's principle and of Carnot cycles. Using Joule's suggestion that the Carnot function could be the temperature measured from absolute zero, Clausius reasoned that at each stage of the cycle work and heat would not be equal, and that the difference between the two was a state function whose change would vanish over a complete cycle. This state function was called internal energy, and it became the basis of the first law of thermodynamics.
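In modern notation, with temperatures on the absolute (kelvin) scale, a reversible Carnot cycle that absorbs heat Q_h at T_h and rejects heat Q_c at T_c does work W = Q_h (T_h - T_c)/T_h. This is the later Kelvin/Clausius form rather than Carnot's own formulation, but it makes his principle concrete: W depends on the heat absorbed and on the temperature difference, and vanishes when T_h = T_c. The sketch below (hypothetical function name, illustrative numbers) also checks that Q_h/T_h equals Q_c/T_c, so no net entropy is produced over the cycle.

```python
def carnot_cycle(q_hot_j, t_hot_k, t_cold_k):
    """Return (work, heat rejected) for one reversible Carnot cycle.

    Temperatures must be absolute (kelvin).
    """
    if not (t_hot_k >= t_cold_k > 0):
        raise ValueError("need T_hot >= T_cold > 0 on the kelvin scale")
    work = q_hot_j * (t_hot_k - t_cold_k) / t_hot_k
    q_cold = q_hot_j - work  # first law over a full cycle: ΔU = 0, so Q_h = W + Q_c
    return work, q_cold

w, q_c = carnot_cycle(1000.0, 500.0, 300.0)
print(f"work = {w:.1f} J, heat rejected = {q_c:.1f} J")
print(f"Q_h/T_h = {1000.0 / 500.0:.3f} J/K, Q_c/T_c = {q_c / 300.0:.3f} J/K")

# No temperature difference, no work
print(carnot_cycle(1000.0, 300.0, 300.0)[0])
```

With these numbers the cycle delivers 400 J of work, rejects 600 J, and both heat-to-temperature ratios equal 2 J/K.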

Classical Thermodynamics

Clausius coined the term entropy in 1865, and it was defined as "an extensive thermodynamic variable shown to be useful in characterizing the Carnot cycle." Classical thermodynamics is mainly used to measure the entropy of an isolated system in thermodynamic equilibrium.

For reversible cyclic processes:

$$\oint \frac{\delta Q_{\text{rev}}}{T} = 0$$

This means that the integral $\int_A^B \frac{\delta Q_{\text{rev}}}{T}$ between two states A and B is path independent, so it defines a state function, entropy (S), that fulfils:

$$dS = \frac{\delta Q_{\text{rev}}}{T}, \qquad S_B - S_A = \int_A^B \frac{\delta Q_{\text{rev}}}{T}$$
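To see this path independence numerically, the following sketch takes one mole of gas from (300 K, 0.010 m³) to (600 K, 0.020 m³) along two reversible paths built from isothermal and constant-volume steps. The ideal-gas model, R ≈ 8.314 J/(mol·K), the monatomic value C_V = 3R/2 and all function names are assumptions made for illustration. The heat absorbed differs between the paths, but ∫δQ_rev/T comes out the same, equal to nR ln(V₂/V₁) + n C_V ln(T₂/T₁).

```python
import math

R = 8.314  # gas constant in J/(mol·K), rounded

def integrate(f, a, b, steps=20_000):
    # Midpoint-rule approximation of the integral of f from a to b
    h = (b - a) / steps
    return h * sum(f(a + (i + 0.5) * h) for i in range(steps))

def isothermal_leg(n, t, v_start, v_end):
    # Reversible isothermal step: δQ_rev = p dV = (nRT / V) dV
    q = integrate(lambda v: n * R * t / v, v_start, v_end)
    s = integrate(lambda v: (n * R * t / v) / t, v_start, v_end)  # δQ_rev / T
    return q, s

def isochoric_leg(n, cv, t_start, t_end):
    # Reversible constant-volume step: δQ_rev = n·Cv·dT
    q = integrate(lambda t: n * cv, t_start, t_end)
    s = integrate(lambda t: n * cv / t, t_start, t_end)  # δQ_rev / T
    return q, s

n, cv = 1.0, 1.5 * R   # one mole of a monatomic ideal gas (assumed)
t1, t2 = 300.0, 600.0  # K
v1, v2 = 0.010, 0.020  # m^3

# Path A: expand at T1, then heat at constant V2
qa1, sa1 = isothermal_leg(n, t1, v1, v2)
qa2, sa2 = isochoric_leg(n, cv, t1, t2)
# Path B: heat at constant V1, then expand at T2
qb1, sb1 = isochoric_leg(n, cv, t1, t2)
qb2, sb2 = isothermal_leg(n, t2, v1, v2)

q_a, s_a = qa1 + qa2, sa1 + sa2
q_b, s_b = qb1 + qb2, sb1 + sb2
print(f"path A: Q = {q_a:.1f} J, integral of dQ/T = {s_a:.4f} J/K")
print(f"path B: Q = {q_b:.1f} J, integral of dQ/T = {s_b:.4f} J/K")
print(f"closed form: {n * R * math.log(v2 / v1) + n * cv * math.log(t2 / t1):.4f} J/K")
```

Both paths give about 14.4 J/K for the entropy change, while the heats absorbed differ by roughly 1700 J: heat depends on the path, entropy does not.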

Entropy Concepts

- Entropy provides a way to state the second law of thermodynamics and to define temperature.

- Entropy can be defined by $dS = \frac{\delta Q_{\text{rev}}}{T}$. For any cycle it obeys the Clausius inequality, $\oint \frac{\delta Q}{T} \le 0$, with equality for a reversible cycle such as the Carnot cycle, over which the entropy change is zero.

- Entropy is a state function and can be calculated for an ideal gas and an Einstein solid.

- Entropy can be visualised in terms of disorder or of time's arrow, since the entropy of an isolated system cannot decrease.
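As an illustration of how entropy can be calculated for an Einstein solid and used to define temperature, the sketch below assumes the standard Einstein-solid model (N oscillators sharing q identical energy quanta of size ε), the Boltzmann relation S = k ln Ω, and the thermodynamic definition 1/T = ∂S/∂U with U = qε. Working in units where k = ε = 1 is a simplifying assumption, the function names are hypothetical, and `math.comb` requires Python 3.8 or later.

```python
import math

def multiplicity(n_oscillators, q_quanta):
    # Ways to share q identical quanta among N distinguishable oscillators: C(q + N - 1, q)
    return math.comb(q_quanta + n_oscillators - 1, q_quanta)

def entropy(n_oscillators, q_quanta):
    # Boltzmann entropy in units of k: S / k = ln Ω (math.log accepts very large ints)
    return math.log(multiplicity(n_oscillators, q_quanta))

def temperature(n_oscillators, q_quanta):
    """Temperature from 1/T = dS/dU with U = q·ε, in units of ε/k.

    Uses a central difference in q, so q_quanta must be at least 1.
    """
    if q_quanta < 1:
        raise ValueError("q_quanta must be >= 1 for the central difference")
    ds_dq = (entropy(n_oscillators, q_quanta + 1) - entropy(n_oscillators, q_quanta - 1)) / 2
    return 1.0 / ds_dq

print(multiplicity(3, 3))  # 10 ways to share 3 quanta among 3 oscillators
N, q = 1000, 1000
print(temperature(N, q), 1 / math.log(1 + N / q))  # finite difference vs large-N formula
```

For N = q = 1000 the finite-difference temperature lies within about 0.1% of the large-system result kT/ε = 1/ln(1 + N/q) ≈ 1.443.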

References:

- [1] McGraw-Hill Concise Encyclopedia of Chemistry. McGraw-Hill, 2004.

- [2] McQuarrie, D. A.; Simon, J. D. Physical Chemistry: A Molecular Approach. University Science Books, Sausalito, 1997, p. 817.

- [3] "Entropy", Encyclopaedia Britannica, 28 September 2006, <http://www.britannica.com/EBchecked/topic/189035/entropy>.

- [4] Lavenda, B. H. A New Perspective on Thermodynamics. Springer, 2009, Sec. 2.3.4.